Artificial Intelligence (AI) has been a buzzword in the tech industry for decades, but recent advancements in machine learning and deep learning have propelled this field to new heights. The potential applications of AI are vast and varied, ranging from personalized healthcare to self-driving cars. As the field of AI continues to evolve at a breakneck pace, businesses and governments around the world are investing heavily in research and development to leverage this powerful technology.

Defining artificial intelligence

There is no single, straightforward, agreed-upon definition of AI, partly because there are so many ways it can help, enhance, and automate human tasks, including its ability to learn and act on its own. Even AI researchers do not agree on an exact definition.

Gartner defines artificial intelligence as applying advanced analysis and logic-based techniques to interpret events, automate decisions, and take action. The idea behind AI is to utilize a set of techniques and technologies to enable machines to perform those tasks that mimic human cognitive abilities, such as understanding natural language, recognizing objects and patterns, making decisions, and learning from experience. This definition acknowledges that AI generally involves probabilistic analysis, the act of combining probability and logic to assign a value to uncertainty.

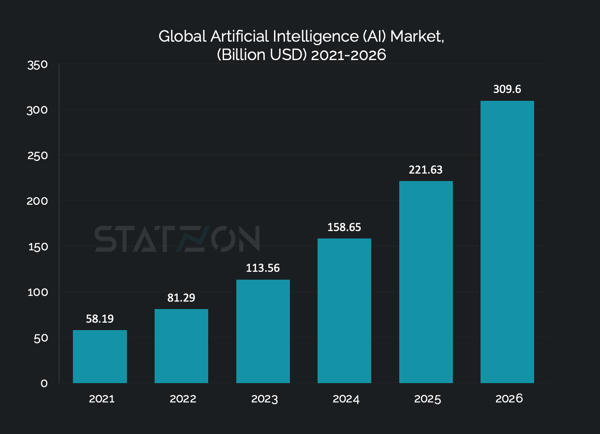

Despite the lack of a universally agreed-upon definition, AI adoption has been steadily increasing across numerous industries. As a result, the global AI market has been expanding rapidly and is expected to maintain this trend in the foreseeable future. In 2021, the AI market was worth USD 58.2 billion, and it is projected to reach USD 113.6 billion by 2023 and USD 309.6 billion by 2026, indicating a CAGR of approximately 39%.

Source: Statzon/ MarketsandMarkets
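
The growth rate quoted above follows from the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) − 1. The short Python sketch below simply applies that formula to the market figures in the paragraph; the function name `cagr` is our own illustration, not part of any library.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# AI market size figures from the paragraph above, in billions of USD.
print(f"2021-2026: {cagr(58.2, 309.6, 5):.1%}")   # 39.7%
print(f"2023-2026: {cagr(113.6, 309.6, 3):.1%}")  # 39.7%
```

Both periods work out to just under 40% a year, in line with the approximately 39% CAGR cited.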

Understanding how artificial intelligence works

AI works by combining large sets of data with algorithms, which are instructions that allow machines to learn from data and make decisions or predictions based on it. During training, the system repeatedly processes the data, measures how far its outputs are from the desired results, and adjusts its internal parameters to reduce that error. This is how an AI system gets better with experience. Once the algorithm has been trained, it can be used to make predictions or draw conclusions from new data.
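
The predict, measure, adjust cycle described above can be illustrated with the simplest possible case: gradient descent on a one-variable linear model. This Python sketch is not how any particular AI product is built; the toy data (generated from y = 2x + 1), the learning rate, and the number of passes are all assumptions chosen for illustration.

```python
# Toy training data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def train(epochs=500, learning_rate=0.05):
    """Fit y = w * x + b by repeatedly measuring the error and adjusting w and b."""
    w, b = 0.0, 0.0  # start from an uninformed model
    history = []     # mean squared error recorded on each pass
    for _ in range(epochs):
        # 1. Predict, and measure how far off each prediction is.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / len(xs))
        # 2. Gradient of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
        grad_b = 2 * sum(errors) / len(xs)
        # 3. Nudge the parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history

w, b, history = train()
print(f"w={w:.3f}, b={b:.3f}, first error={history[0]:.1f}, last error={history[-1]:.6f}")
```

After training, `w` and `b` should end up very close to 2 and 1, and the recorded error falls from 33.0 on the first pass to nearly zero. Real systems apply the same idea to models with millions or billions of parameters.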

Major subsets of artificial intelligence

Several subsets of AI give systems the ability to learn, recognize patterns, make predictions, and more. Some of the most important terms to know are:

Machine Learning

Machine learning is the technology that enables AI algorithms to learn from data and make predictions or decisions without being explicitly programmed. Instead of receiving explicit instructions, machine learning algorithms detect patterns in data and experience and learn from them how to make predictions and recommendations. This allows computer systems, programs, or applications to improve their results automatically as they gain experience.

The three primary categories of machine learning are supervised, unsupervised, and reinforcement learning.

Supervised machine learning

In supervised learning, algorithms use data and feedback labeled by humans to learn the relationship of given inputs to a given output. In other words, the desired output is known for each input. The algorithm learns to predict the correct output for new, unseen inputs based on the patterns it has learned from the labeled data.

Supervised learning algorithms are generally used to solve classification problems (predicting a category) and regression problems (predicting a numerical value). Gartner predicts that supervised learning will remain the most widely used type of machine learning in IT businesses. Some of the most popular uses of supervised learning are weather prediction, sales forecasting, and stock price analysis.
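
To make the idea of learning from labeled examples concrete, here is a minimal k-nearest-neighbours classifier in Python, one of the simplest supervised learning techniques. The weather-style features and labels are hypothetical, and a real system would also scale its features and train on far more data.

```python
import math
from collections import Counter

# Hypothetical labeled training data: ((hours of sunshine, humidity %), label).
training_data = [
    ((9.0, 30.0), "dry"),
    ((8.0, 40.0), "dry"),
    ((7.5, 35.0), "dry"),
    ((2.0, 85.0), "rain"),
    ((1.0, 90.0), "rain"),
    ((3.0, 80.0), "rain"),
]

def predict(features, k=3):
    """Label a new input by majority vote among its k closest labeled examples."""
    by_distance = sorted(training_data, key=lambda item: math.dist(features, item[0]))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

print(predict((8.5, 38.0)))  # dry
print(predict((1.5, 88.0)))  # rain
```

Here the human-provided labels ("dry", "rain") are the "known desired output" for each input; the model's only job is to generalize them to inputs it has not seen.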

Unsupervised learning

With supervised learning, humans guide the system by labeling the training data. With unsupervised learning, by contrast, the machine searches the data for patterns without any labels from users. In other words, the algorithm explores the input data without being given an explicit target variable. Unsupervised learning is useful when we have a large amount of data but do not know how to classify it, so we let the algorithm recognize patterns and group the data for us.

The main applications of unsupervised learning include clustering, visualization, finding association rules, and anomaly detection. Some examples of real-world applications include fraud detection, creating customer groups based on purchase behavior, e-commerce user-specific product recommendations, and genome visualization in genomics applications.
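
Grouping customers by purchase behavior, one of the examples above, is commonly done with the k-means clustering algorithm. The Python sketch below implements basic k-means (Lloyd's algorithm) on hypothetical, unlabeled customer data. No labels are supplied and the algorithm discovers the two groups on its own, although the number of clusters `k` still has to be chosen by a person.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Group unlabeled points into k clusters (Lloyd's k-means algorithm)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, clusters

# Hypothetical customers: (orders per year, average basket value in USD). No labels.
customers = [(2, 20.0), (3, 25.0), (1, 22.0), (20, 150.0), (22, 160.0), (18, 140.0)]
centroids, clusters = kmeans(customers, k=2)
for members in clusters:
    print(members)
```

The result separates occasional, low-spend customers from frequent, high-spend ones, a grouping a marketing team could then name and act on.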

Reinforcement learning

Reinforcement learning is often described as the most advanced type of machine learning and the one closest to how humans learn. In reinforcement learning, the algorithm, or agent, learns to make decisions by interacting with its environment and receiving feedback in the form of positive or negative rewards for each action it takes. Gartner points out that many ML platforms lack reinforcement learning capabilities, because reinforcement learning requires more computing power and more complex computing infrastructure than most businesses have access to.
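
The reward-driven loop described above can be sketched with tabular Q-learning, a classic reinforcement learning algorithm. In this Python example, the environment is a hypothetical five-cell corridor in which the agent earns a reward only for reaching the last cell; the learning rate `alpha`, discount factor `gamma`, and exploration rate `epsilon` are illustrative assumptions.

```python
import random

N_STATES = 5        # a hypothetical corridor: cells 0..4, the goal is cell 4
ACTIONS = (-1, 1)   # step left or step right

def step(state, action):
    """Toy environment: reward 1 for reaching the goal, 0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}  # value estimates
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:
                # Exploit: pick the best-known action, breaking ties randomly.
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Move the estimate toward the reward plus the discounted best future value.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: step right from every cell
```

Starting with no knowledge, the agent learns by trial and error that stepping right leads to the reward. Game-playing and robotics systems build on the same principle, typically replacing the lookup table with a deep neural network.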

Reinforcement learning is versatile and can be applied to many different types of tasks and scenarios. Some applications include:

Game playing: reinforcement learning algorithms have been applied to games such as Go, chess, and Atari video games. The agent learns which decisions lead to the highest score or the best chance of winning.

Robotics: reinforcement learning is used to train robots to perform tasks such as grasping objects, navigating a new environment, and even walking. The agent learns by trial and error, receiving rewards for successful actions and penalties for unsuccessful ones.

Autonomous driving: reinforcement learning helps autonomous vehicles make decisions such as lane changing, acceleration, and braking. The agent learns to maximize safety and efficiency by interacting with its environment.

Deep Learning

Deep learning is a subset of machine learning that can handle a wider range of data with less pre-processing by humans. Given enough training data, it usually produces more accurate results than traditional machine learning methods.

In deep learning, interconnected layers of software nodes known as “neurons” form a neural network that analyzes and solves problems by extracting knowledge from raw data, learning increasingly complex features of the data at each layer. The network makes a decision about the data, checks whether that decision was correct, and uses what it has learned to make decisions about new data. In simple terms, deep learning teaches a computer to identify patterns and make decisions based on them, loosely similar to how our brains operate.
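
The decide, check, adjust loop of a neural network can be shown at toy scale without any framework. The Python sketch below trains a two-layer network with backpropagation on XOR, a pattern no single linear layer can learn. Real deep learning uses many more layers and libraries such as PyTorch or TensorFlow; the hidden-layer size, learning rate, epoch count, and random seed here are arbitrary assumptions, and how well the network fits can vary with the seed.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# XOR: output 1 only when exactly one input is 1.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
HIDDEN = 4           # number of hidden "neurons" (an arbitrary choice)
LEARNING_RATE = 0.5

rng = random.Random(42)
w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]  # input -> hidden
b1 = [0.0] * HIDDEN
w2 = [rng.uniform(-1, 1) for _ in range(HIDDEN)]                      # hidden -> output
b2 = 0.0

def forward(x):
    """The hidden layer extracts intermediate features; the output layer combines them."""
    hidden = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(HIDDEN)]
    output = sigmoid(sum(w2[j] * hidden[j] for j in range(HIDDEN)) + b2)
    return hidden, output

def mean_squared_error():
    return sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA)

initial_error = mean_squared_error()
for epoch in range(5000):
    for x, y in DATA:
        hidden, out = forward(x)
        # Backpropagation: how much did each weight contribute to the error?
        d_out = (out - y) * out * (1 - out)
        d_hidden = [d_out * w2[j] * hidden[j] * (1 - hidden[j]) for j in range(HIDDEN)]
        # Nudge every weight and bias to reduce that error.
        for j in range(HIDDEN):
            w2[j] -= LEARNING_RATE * d_out * hidden[j]
            w1[j][0] -= LEARNING_RATE * d_hidden[j] * x[0]
            w1[j][1] -= LEARNING_RATE * d_hidden[j] * x[1]
            b1[j] -= LEARNING_RATE * d_hidden[j]
        b2 -= LEARNING_RATE * d_out
final_error = mean_squared_error()

print(f"error before training: {initial_error:.3f}, after: {final_error:.3f}")
for x, y in DATA:
    print(x, "target", y, "prediction", round(forward(x)[1], 2))
```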

Deep learning reduces error rates in image identification by 41%, facial recognition by 27%, and voice recognition by 25% when compared to classical machine learning.

Major models of deep learning include:

Recurrent Neural Networks (RNNs): RNNs can learn from sequences of data and output either a single value or another sequence. They are used in language translation apps, image caption generation, and stock price prediction.

Generative Adversarial Networks (GANs): GANs are used to generate lifelike images and videos, as well as for data augmentation, the process of generating synthetic data to train models.

Transformers: a type of deep learning model that excels at understanding language. Because they can capture deep connections within data, transformers are also effective for other sequential data, such as video and time series. Chatbots are an excellent example of how transformer models are being used in AI.
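
The defining feature of an RNN is a hidden state that is carried from one step of a sequence to the next. The Python sketch below runs a tiny RNN forward over two sequences. Its weights are hypothetical and untrained (in practice they are learned), but it shows why the same inputs in a different order produce a different result.

```python
import math

# Hypothetical, untrained weights: scalar input, 2-unit hidden state.
W_X = [0.5, -0.3]                # input -> hidden
W_H = [[0.8, 0.1], [-0.2, 0.9]]  # hidden -> hidden (the recurrent connection)
B = [0.0, 0.1]

def rnn_forward(sequence):
    """Return the final hidden state after reading the whole sequence."""
    h = [0.0, 0.0]  # the hidden state starts out empty
    for x in sequence:
        # Each new state depends on the current input AND the previous state.
        h = [
            math.tanh(W_X[i] * x + sum(W_H[i][j] * h[j] for j in range(2)) + B[i])
            for i in range(2)
        ]
    return h

print(rnn_forward([1.0, 0.0, 0.0]))
print(rnn_forward([0.0, 0.0, 1.0]))
```

A translation or captioning model would pass this state (or the state at every step) to further layers that produce the output value or sequence.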

Natural Language Processing

Natural Language Processing (NLP) is a critical piece of the AI process that allows computers to recognize, analyze, interpret, and comprehend human language in the form of text, speech, and even emojis. NLP enables computers to interact with people in their own language, allowing them to read text, listen to speech, understand it, measure sentiment, and determine which parts are important.

NLP is one important technology behind chatbots, language translator apps, and targeted advertising. Many companies are also applying NLP techniques to analyze social media posts and figure out what customers think about their products.
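
Production sentiment analysis learns from large labeled datasets, usually with deep learning. The Python sketch below instead uses a much simpler, older technique, a hand-written word lexicon with basic negation handling, just to make "measuring sentiment" in customer posts concrete. The word list and its scores are hypothetical.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score.
LEXICON = {"love": 2, "great": 2, "good": 1, "slow": -1, "bad": -2, "broken": -2, "hate": -2}
NEGATIONS = {"not", "never", "no", "isn't", "don't"}

def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by summing word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # A preceding negation flips the polarity ("not good" counts as negative).
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

posts = [
    "I love this phone, the camera is great!",
    "Battery life is bad and the app is slow.",
    "The screen is not good.",
]
for post in posts:
    print(sentiment(post), "|", post)
```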

Computer Vision

Computer vision is a branch of artificial intelligence that teaches machines to perceive and make sense of the visual world. You might be familiar with CAPTCHAs, the online puzzles that ask you to identify cars, crosswalks, bikes, and mountains. With examples labeled by humans, AI systems can learn to spot these items in images. Computer vision requires different technology and resources than regular machine learning programs, and it is also considerably more accurate at identifying organic objects. It plays a critical role in helping self-driving cars navigate and avoid obstacles, and it underpins applications such as facial recognition systems for security.
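
Most modern computer vision models are convolutional neural networks, which slide small filters over an image to detect features such as edges. The Python sketch below applies one hand-picked filter (the Sobel horizontal-gradient kernel) to a hypothetical 5×5 grayscale image; a trained network learns many such filters from labeled images instead of having them written by hand. Like most deep learning libraries, the function computes what is technically a cross-correlation, which is conventionally called convolution in this context.

```python
# Hypothetical 5x5 grayscale image: dark on the left (0), bright on the right (9).
IMAGE = [[0, 0, 0, 9, 9] for _ in range(5)]

# Sobel kernel that responds to left-to-right brightness changes (vertical edges).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

def convolve(image, kernel):
    """Slide the kernel over the image (no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]

for row in convolve(IMAGE, SOBEL_X):
    print(row)  # [0, 36, 36] on every row
```

The response is zero where the image is uniform and large where brightness jumps from dark to bright, which marks the vertical edge in the image.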

The latest AI megatrends you need to know

Generative AI

ChatGPT has taken the world by storm. It is an AI-powered chatbot developed by OpenAI and launched in November 2022, built on OpenAI's GPT-3.5 and GPT-4 families of large language models (LLMs). This generative AI application, which you can chat with like a very intelligent human and instruct to write things previously written by people, has changed the way many people do their work.

In simple terms, generative AI is a subset of artificial intelligence that focuses on creating and generating new content, such as text, images, and audio, based on input data. The versatility of this technology is evident in its various applications, ranging from language translation and image generation to personalized content creation and music composition. Its impact on the content creation industry has been revolutionary.

Large Language Model (LLM)

A large language model (LLM), such as the GPT models behind ChatGPT, is a deep learning model trained on massive amounts of text data to learn the patterns and relationships in natural language. The goal of an LLM is to generate coherent, fluent language that is semantically meaningful and grammatically correct. LLMs achieve this using a neural network architecture called the Transformer, first introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need”.

The Transformer architecture is designed to handle sequential data, like natural language, by processing it in parallel. This is accomplished using a mechanism called self-attention, which allows the model to attend to various parts of the input sequence when generating the output sequence.
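
The self-attention step can be sketched in a few lines. The Python example below implements scaled dot-product attention: each position scores every position in the sequence, turns the scores into weights with a softmax, and outputs a weighted mix of the sequence. As a simplifying assumption, queries, keys, and values are the input vectors themselves; a real Transformer first multiplies the inputs by learned matrices and runs many attention heads in parallel. The three token vectors are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention, using the inputs as queries, keys, and values."""
    d = len(x[0])
    outputs, all_weights = [], []
    for q in x:  # each position is computed independently, so this can run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)  # how much this position attends to every position
        outputs.append([sum(w * v[i] for w, v in zip(weights, x)) for i in range(d)])
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Because no position's result depends on another position's result, all positions can be computed at once, which is the parallelism the paragraph above refers to.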

During training, an LLM is presented with a large corpus of text data and is trained to predict the next word in a sequence of words, given the preceding words. This is known as a language modeling task. By doing this repeatedly over a large dataset, the LLM can learn the statistical patterns and relationships in natural language.
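
The next-word objective can be illustrated with a far simpler, much older technique: a bigram model that counts which word follows which. This Python sketch is not how an LLM works internally, since an LLM uses a Transformer to condition on long stretches of preceding text and learns probabilities rather than storing raw counts, but it shows what predicting the next word from the preceding words means in practice. The tiny corpus is hypothetical.

```python
from collections import Counter, defaultdict

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```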

Once an LLM has been trained, it can be used for a wide range of natural language processing tasks, including language translation, text generation, and sentiment analysis, among others.

Generative AI market size and potential

There are many other generative AI tools and models besides ChatGPT. DALL-E, another OpenAI product, is designed specifically for image generation. Introduced in 2021, DALL-E is a generative model that creates images from textual descriptions, no matter how absurd the prompt sounds, such as a bird playing chess or an avocado sitting on a sofa.

Goldman Sachs Research suggests that breakthroughs in generative AI could bring significant changes to the global economy, potentially raising global GDP by 7% (nearly USD 7 trillion) and lifting productivity growth by 1.5 percentage points over a 10-year period. This new wave of AI systems could also significantly affect labor markets worldwide, with around 300 million full-time jobs exposed to automation. However, not all automated work will necessarily result in job losses: the aim of AI is largely to supplement the workforce rather than replace it.

The market size for generative AI itself is estimated at around USD 11 billion in 2023 and is projected to grow to over USD 50 billion by 2026, according to the research firm MarketsandMarkets.

Generative AI application in business

The impact of generative AI is particularly notable in the marketing and advertising industry. By analyzing customer data and preferences, generative AI tools such as ChatGPT, Jasper, and Copysmith can generate content tailored to attract and convert potential customers. This includes personalized emails, customized social media ads, and even individualized product recommendations on e-commerce sites.

In the finance sector, generative AI could automate tasks such as financial analysis, personalized investment portfolio creation, and investment recommendations based on customer data and preferences. Bloomberg recently released BloombergGPT, a finance-focused LLM trained on enormous amounts of financial data to help with risk assessment, gauge financial sentiment, and automate some accounting processes.

Generative AI is also coming to the healthcare sector. One of the earliest large-scale uses of generative AI in healthcare is being rolled out at the University of Kansas Health System, where the aim is a generative AI platform that creates summaries of medical conversations from audio recorded during patient visits. Atropos Health and Syntegra have also collaborated to raise funds and develop a system capable of generating realistic synthetic medical images, a technology that could help researchers gain insights into various diseases.

Then there’s Runway, a New York-based company that recently secured USD 50 million in funding at a USD 500 million valuation. Popular among designers, artists, and developers, Runway provides a platform that lets users generate full videos from a text prompt, which is especially useful for people with limited programming skills.

Using a variety of machine learning algorithms, Runway can be used to create unique and dynamic content. Users can design and train their own models or use pre-built models and templates to create new projects. Fashion designers can use Runway to generate new clothing designs based on specific parameters, such as color, texture, and fabric. Artists are using Runway to create digital art, video, music, and 3D animation. Enterprise customers such as CBS’s The Late Show with Stephen Colbert and the visual effects team behind the Hollywood hit Everything Everywhere All at Once have also used Runway for video editing, particularly for rotoscoping, a process that could take the team five hours per shot by hand compared with five minutes using Runway’s AI tool.

Intelligent automation

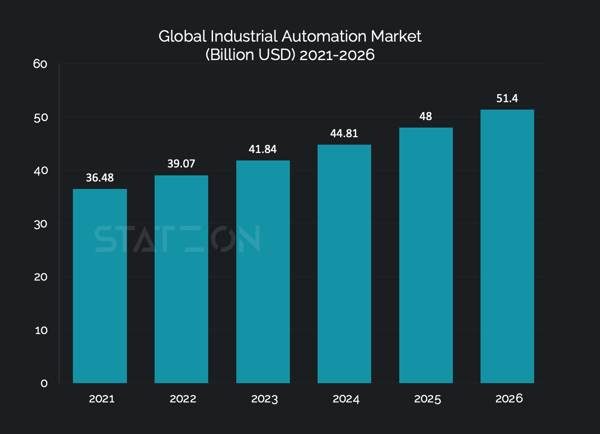

Automation and AI have been around for quite some time, but their adoption continues to grow rapidly. The global industrial automation industry was valued at USD 39 billion in 2022 and is projected to grow to USD 51 billion by 2026, according to data from the research firm MarketsandMarkets. Recent advancements have expanded the capabilities of automation and AI, extending their use across more industries.

Source: Statzon/ MarketsandMarkets

Artificial intelligence and robotics

Factory automation has long relied on traditional industrial robots programmed to perform repetitive tasks, but these robots struggle when faced with more complex tasks. In recent years, application-specific, AI-enabled products have emerged with the goal of streamlining particular tasks. Collaborative robots (cobots), for example, have become a popular choice in factory automation because they can perform more complex tasks than traditional industrial robots.

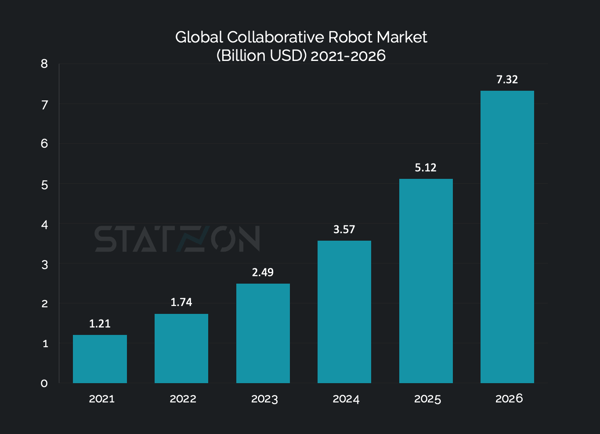

Equipped with computer vision and machine learning, cobots can be programmed to pick up and handle objects using neural networks and other machine learning models, whereas traditional robots require predetermined positions and trajectories to perform the same tasks. Cobots are currently in high demand, with the market growing at a CAGR of 43%, according to MarketsandMarkets estimates.

Source: Statzon/ MarketsandMarkets

Next-generation cobots can pick up items from non-fixed locations using 3D vision and laser triangulation. The more objects they handle, the more efficient they become, thanks to continuous, iterative refinement of their machine learning algorithms.

AI usage in industrial automation

Robotics is just one subset of industrial automation. Industrial equipment undergoing digital transformation generates vast amounts of data that is often overlooked. By using AI to analyze this data, however, manufacturers can gain valuable insights and deep visibility into their operations.

Siemens, for example, collected 11 million data sets from its factories at relatively low cost and has used this data to begin implementing effective predictive maintenance. AI algorithms can be trained to analyze large amounts of data from sensors and machines to predict when maintenance is needed, reducing downtime and increasing efficiency. AI can also analyze the data for quality control, identifying product defects with high accuracy so that issues can be detected and corrected faster. Finally, AI helps reveal blind spots that manufacturers may not know they have, reducing uncertainty and providing a better understanding of the actual production risks involved.
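
Predictive maintenance often starts from a simple question: is this sensor reading unusual compared with the machine's recent behavior? The Python sketch below answers it with a rolling z-score, a basic statistical baseline rather than the trained AI models described above. The vibration readings, window size, and threshold are hypothetical.

```python
import statistics

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that sit far outside the recent normal range."""
    flagged = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mean = statistics.mean(recent)
        stdev = statistics.stdev(recent)
        # z-score: how many standard deviations the new reading is from the recent mean
        if stdev > 0 and abs(readings[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged

# Hypothetical vibration readings (mm/s) from one machine; index 8 is a sudden spike.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 2.1, 4.8, 2.1, 2.0]
print(flag_anomalies(vibration))  # [8]
```

In practice, a flag like this might prompt an inspection before a failure occurs; learned models extend the idea by combining many sensors and recognizing subtler patterns.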

Intelligent Document Processing

Intelligent Document Processing (IDP) is a technology that uses Artificial Intelligence (AI) and Machine Learning (ML) to automate the processing of unstructured data contained within documents. It can be used to extract relevant data from documents such as invoices, receipts, and contracts and convert it into structured data that can be easily analyzed and processed by computer systems.
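
The core IDP step of turning unstructured document text into a structured record can be illustrated in Python. In this sketch, hand-written regular expressions stand in for the ML models a real IDP product would use, and the invoice text is hypothetical, as if it had already passed through an OCR step. Fields that cannot be found come back as `None`, the kind of exception a human reviewer would handle.

```python
import re

# Hypothetical text, as it might come out of OCR on a scanned invoice.
ocr_text = """
ACME Supplies Ltd.
Invoice No: INV-2023-0042
Date: 2023-03-15
Total Due: USD 1,250.00
"""

# Hand-written patterns (an assumption) standing in for learned extraction models.
PATTERNS = {
    "invoice_number": r"Invoice No:\s*(\S+)",
    "date": r"Date:\s*(\d{4}-\d{2}-\d{2})",
    "total": r"Total Due:\s*USD\s*([\d,]+\.\d{2})",
}

def extract_fields(text):
    """Convert unstructured invoice text into a structured record."""
    record = {}
    for field, pattern in PATTERNS.items():
        match = re.search(pattern, text)
        record[field] = match.group(1) if match else None  # None: route to a human reviewer
    if record["total"] is not None:
        record["total"] = float(record["total"].replace(",", ""))
    return record

print(extract_fields(ocr_text))
```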

IDP technology can automate a wide range of document processing tasks, including data entry, invoice processing, and contract analysis. By integrating IDP with other emerging technologies such as robotic process automation (RPA), NLP, and optical character recognition (OCR), new applications such as automatic summarization, translation, and question answering can be developed.

Despite the trend toward digital transformation, paper and electronic documents remain relevant and valuable, and nearly every organization faces the common challenge of accessing data trapped in analog and unstructured formats. Many organizations still process documents and images manually, or keep data entry and processing separate from core operations. Automation solutions like IDP can help address this challenge.

Although IDP technology is becoming more accurate over time, human input is still required to configure the platform, train the algorithms, monitor outputs and performance, and handle exceptions. Companies deploying IDP will still need a significant human workforce to ensure that scaled-up deployments continue to perform well.

In one example of IDP in use, a global investment management firm had only one year to comply with customer regulations that required it to extract and reconcile co-applicant account information across more than one million pages. Within four weeks of implementing IDP, the firm had doubled its processing speed with half as much effort, a fourfold increase in document throughput.

According to an upcoming McKinsey Global Executives Survey, 70% of companies are testing automation in at least one business area. Intelligent document management and processing tools, such as OCR, are the most commonly used automation solutions beyond the initial testing phase.

Autonomous Driving

Autonomous driving is an area where AI has seen significant development in recent years. Autonomous vehicles (AVs) come equipped with a variety of sensors, including cameras, radar, and lidar, to help them understand their surroundings and plan their paths. These sensors generate large amounts of data that must be processed in real time. AV systems rely heavily on AI, in the form of machine learning and deep learning, to process this data efficiently and to train and validate their autonomous driving systems.

Companies are increasingly turning to deep learning to speed up the development of their AV programs. Instead of manually programming rules for AVs to follow, such as "stop if you see red," deep neural networks (DNNs) allow AVs to learn how to navigate their surroundings by analyzing sensor data. These algorithms are loosely modeled on the human brain, meaning they learn through experience, and their performance improves as they gain more experience.

Vehicles’ level of autonomy

Google's Waymo project is an illustration of a nearly fully self-driving car that still requires a human driver to intervene in exceptional circumstances. While it cannot be considered entirely self-driving, it has a high degree of autonomy and is capable of driving itself in optimal conditions.

Many new vehicles today possess some degree of self-driving capabilities, such as hands-free steering, adaptive cruise control, and lane-centering steering. These features allow for semi-autonomous driving, where the driver is still required to pay attention, but the car can assist in maintaining distance, staying centered in a lane, and steering without the driver's hands on the wheel.

The dream is to reach Level 5 of autonomous driving. As defined by SAE International (formerly the Society of Automotive Engineers), Level 5 is full automation: the vehicle performs all driving tasks under all conditions, with no human attention or intervention needed. Waymo is a good example of a Level 4 autonomous car, which can operate without human intervention in pre-mapped suburban areas with low-to-moderate traffic but still has a driver's seat so that a human can take over if needed.

Level 4 cars are still far from widespread adoption, although Mercedes has announced that Level 4 autonomy may be possible by the end of the decade. Mercedes-Benz became the first automaker certified to sell vehicles with Level 3 autonomous technology in the US. At Level 3, the automated driving function takes over most driving tasks, but a driver is still required and must be ready to take control of the vehicle at all times. The system will be available on 2024 Mercedes-Benz EQS and S-Class models and is currently approved for use only in Nevada.
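
The automation levels discussed above can be written down as a small lookup type. This Python sketch loosely follows the level names in SAE J3016; the enum member names are our own abbreviations, and the helper `human_must_be_ready` is a hypothetical simplification: at Levels 0 to 2 the driver supervises constantly, and at Level 3 the driver must still be ready to take over, while Levels 4 and 5 need no human fallback within their operating conditions.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    """Driving automation levels, loosely following the SAE J3016 names."""
    NO_AUTOMATION = 0           # the human does all the driving
    DRIVER_ASSISTANCE = 1       # e.g. adaptive cruise control
    PARTIAL_AUTOMATION = 2      # steering and speed support; driver supervises constantly
    CONDITIONAL_AUTOMATION = 3  # system drives; driver must be ready to take over
    HIGH_AUTOMATION = 4         # no takeover needed within a defined area and conditions
    FULL_AUTOMATION = 5         # drives everywhere, under all conditions

def human_must_be_ready(level: SAELevel) -> bool:
    """Hypothetical helper: Levels 0-3 still rely on a human driver as the fallback."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION

for level in SAELevel:
    print(level.value, level.name, "human fallback" if human_must_be_ready(level) else "no human fallback")
```

Using `IntEnum` keeps the levels ordered, so comparisons such as "Level 3 or below" read naturally in code.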

Autonomous vehicles market potential

The promise of a fully automated car in the near future now appears unlikely to be fulfilled. Gartner has declared that autonomous vehicle development has stalled and entered the “Trough of Disillusionment”, the stage after the peak of inflated expectations in which interest wanes as a technology fails to meet the high expectations set for it. Some companies have abandoned their autonomous driving projects: Ford and Volkswagen, for example, shut down Argo AI, a highly regarded self-driving car developer in which the two companies had invested billions of dollars.

Even so, Gartner believes that most technologies eventually move past the “Trough of Disillusionment” toward wider adoption. As the technical, regulatory, and public perception challenges are addressed, autonomous vehicles are expected to become more widely accepted and integrated into society.

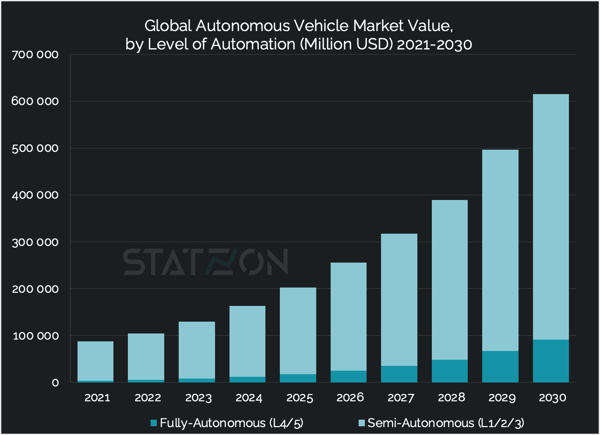

Despite some setbacks, the potential of autonomous driving to transform mobility is widely acknowledged. A recent McKinsey report forecasts that autonomous driving could create USD 300 to 400 billion in revenue by 2035, and that the market for autonomous driving components (cameras, lidar, radar, domain control, and safety sensors) could reach USD 55 billion to USD 80 billion by 2030. Next Move Strategy Consulting takes a more optimistic view, projecting that the market could reach USD 600 billion by 2030. However, its calculations include Level 1 automation (cars with adaptive cruise control), which has become ubiquitous today and is not accounted for in McKinsey's estimates. Next Move Strategy Consulting categorizes Level 1 to Level 3 cars as semi-autonomous vehicles and Level 4 and Level 5 cars as fully autonomous vehicles.

Source: Statzon/ Next Move Strategy Consulting

A McKinsey survey shows that customers are excited about autonomous driving technology and willing to pay for it. However, because of steep up-front costs, automakers may limit commercialization of advanced automated driving systems to the premium market. Level 3 and Level 4 software and hardware could cost USD 5 000 or more per vehicle during early rollout, with development and validation costs exceeding USD 1 billion. High sticker prices may limit adoption, making Level 2+ systems more commercially viable.

Several automakers currently offer Level 2+ systems, with more vehicle launches planned in the coming years. If equipped with sufficient sensors and computing power, the technology developed for Level 2+ systems could also contribute to the development of Level 3 systems.

Sources: Statzon, McKinsey (1), McKinsey (2), Goldman Sachs, Business Insider, WSJ, S&P Global, ING, Automate, USNews